Automatic text summary – Lincoln, Introduction to Automatic Summary – The Data Blog

A blog on data, artificial intelligence, and my projects

Automatic summarization consists of taking a long text, or even a set of texts, and automatically generating a much shorter text that contains most of the information. Simple? Not quite. First, you have to agree on which information is really important. Then you must be able to extract it properly and reorganize it, all in a grammatical text and without human intervention. And that is without even counting the large number of possible summaries!

Automatic text summary

With the explosion in the collection and storage of text data, the need to analyze this mass of data and extract relevant information from it is growing.

In addition, the boom in Deep Learning models for natural language processing (NLP) has made it easier to use textual data for operational purposes. Automatic text summarization, like question answering, similarity analysis, document classification and other NLP tasks, is one of these uses.

It is in this context that Lincoln's Innovation Lab decided to work on automatic text summarization. This work allowed us to benchmark the automatic summarization models available for French, train our own model and finally put it into production.

Model training

Data

Before starting, we first had to build a dataset for training automatic summarization models. We collected news articles from several French news sites. This dataset contains ~60K articles and is continuously updated.

State of the art

Automatic summarization algorithms fall into two categories: extractive and abstractive. In the extractive setting, summaries are built from sentences extracted from the text, whereas abstractive summaries are generated as new sentences.
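To make the extractive setting concrete, here is a minimal sketch of a classic frequency-based extractive summarizer: each sentence is scored by the average frequency of its content words in the document, and the top-scoring sentences are returned verbatim, in their original order. This is a generic textbook baseline, not the Lab's model; the function names, the naive sentence splitter and the tiny stopword list are illustrative assumptions.

```python
import re
from collections import Counter
from typing import List

# Illustrative stopword list (an assumption); a real system would use a full,
# language-specific list (French, in this project's case).
STOPWORDS = {"a", "an", "and", "at", "in", "of", "on", "the", "to"}


def content_words(text: str) -> List[str]:
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n_sentences: int = 2) -> List[str]:
    """Return the n highest-scoring sentences, kept in document order."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        # Average document frequency of the sentence's content words.
        words = content_words(sentence)
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Restore original order so the summary reads like the source.
    return [sentences[i] for i in sorted(ranked[:n_sentences])]
```

For example, `extractive_summary("Cats purr. Cats purr loudly at night. Dogs bark.", 1)` returns `["Cats purr."]`. Every output sentence is copied from the input, which is exactly what makes the method extractive.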

Automatic summarization models are quite common in English, but much less so in French.

Metrics

To evaluate the models, we used the following metrics:

ROUGE: Undoubtedly the most frequently reported measure in summarization tasks, the Recall-Oriented Understudy for Gisting Evaluation (Lin, 2004) counts the n-grams shared by the evaluated summary and the human reference summary.

METEOR: The Metric for Evaluation of Translation with Explicit ORdering (Banerjee and Lavie, 2005) was designed to evaluate machine translation output. It is based on the harmonic mean of unigram precision and recall, with recall weighted more heavily than precision. METEOR is often reported in automatic summarization papers (See et al., 2017; Dong et al., 2019), alongside ROUGE.

Novelty: It has been observed that some abstractive models rely too heavily on extraction (See et al., 2017; Kryściński et al., 2018). It has therefore become common to measure the percentage of novel n-grams in the generated summaries, i.e. n-grams that do not appear in the source text.

Source: translated from the MLSUM paper [2].
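The three metrics can be sketched in a few lines. This is a simplified illustration that assumes whitespace tokenization and exact word matching: ROUGE-N recall as overlapping n-grams divided by reference n-grams, METEOR's recall-weighted F-mean (Banerjee and Lavie's 10PR / (R + 9P)) without stemming, synonym matching or the fragmentation penalty, and novelty as the share of summary n-grams absent from the source. The function names are hypothetical; reported scores should come from reference implementations such as the `rouge-score` package for ROUGE.

```python
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """ROUGE-N recall: clipped n-gram overlap / number of reference n-grams."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # Counter '&' keeps the minimum counts
    return overlap / max(sum(ref.values()), 1)


def meteor_fmean(candidate: str, reference: str) -> float:
    """Simplified METEOR F-mean on exact unigram matches (no penalty term)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    matches = sum((Counter(cand) & Counter(ref)).values())
    if matches == 0:
        return 0.0
    precision, recall = matches / len(cand), matches / len(ref)
    # Weighted harmonic mean in which recall counts nine times as much as precision.
    return 10 * precision * recall / (recall + 9 * precision)


def novel_ngram_pct(summary: str, source: str, n: int = 2) -> float:
    """Percentage of summary n-gram occurrences that never appear in the source."""
    summary_ngrams = ngrams(summary.lower().split(), n)
    source_ngrams = ngrams(source.lower().split(), n)
    total = sum(summary_ngrams.values())
    if total == 0:
        return 0.0
    novel = sum(c for g, c in summary_ngrams.items() if g not in source_ngrams)
    return 100 * novel / total
```

With the source "the president met his counterpart in washington" and the summary "the president met joe biden", two of the four summary bigrams are new, giving a bigram novelty of 50%. This sketch counts n-gram occurrences; some papers count unique n-grams instead.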

Model deployment

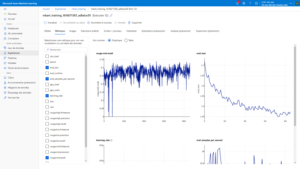

For model training, we used the Azure ML cloud service, which provides a complete environment for training, monitoring and deploying models.

More precisely, we used the Python SDK, which lets you manage the entire Azure ML environment programmatically, from launching jobs to deploying models.

However, we packaged our final model in a containerized Flask application, which was then deployed to a Kubernetes cluster via CI/CD pipelines.
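For illustration, a containerized Flask service of this kind might expose an endpoint like the one below. This is a minimal sketch, not Lincoln's actual application: the `/summarize` and `/health` routes, the JSON field names and the `summarize` placeholder (a naive "first sentences" baseline standing in for the trained model) are all hypothetical.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def summarize(text: str, max_sentences: int = 2) -> str:
    """Hypothetical placeholder for the trained model: keep the first sentences."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    if not sentences:
        return ""
    return ". ".join(sentences[:max_sentences]) + "."


@app.route("/summarize", methods=["POST"])
def summarize_endpoint():
    # silent=True returns None instead of raising on a missing or invalid JSON body.
    payload = request.get_json(silent=True) or {}
    text = payload.get("text", "")
    if not text:
        return jsonify({"error": "missing 'text' field"}), 400
    return jsonify({"summary": summarize(text)})


@app.route("/health", methods=["GET"])
def health():
    # Lightweight route that a Kubernetes liveness/readiness probe could target.
    return jsonify({"status": "ok"})
```

In a container, `app` would typically be served by a production WSGI server such as gunicorn rather than Flask's development server.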

The results

First, we ran several experiments, training the models on 10K articles while varying the number of input tokens (512 or 1024) and the architecture.

First observation: ROUGE and METEOR do not seem well suited to assessing the performance of our models. We therefore chose to base our comparisons on the novelty score alone and selected the architecture that favored more abstractive summaries.

After scaling training up to 700K articles, we significantly improved the results and validated a first version, which you will find below.

Attention points

Beyond performance, this experiment highlighted some limitations of automatic summarization:

Currently, the input length of Transformer models is limited by GPU memory. Since the memory cost of self-attention grows quadratically with the input length, this is a real problem for summarization, where the texts to be summarized are often fairly long.

It is very difficult to find relevant metrics to assess text generation tasks.

Beware of the weight of extraction: we also ran into several problems related to the data themselves. The main one was that the summary attached to each article was often a paraphrase, or even a duplicate, of the article's first sentences. This encouraged our models to be more extractive than abstractive, simply returning the first sentences of the article. We therefore had to curate the data, removing problematic articles to avoid this kind of bias.
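Two of these limitations lend themselves to a quick sketch. `attention_matrix_bytes` shows the arithmetic behind the quadratic memory cost: the self-attention score matrix holds one value per pair of tokens, so going from 512 to 1024 input tokens multiplies its size by four. It counts only that matrix (float32, one head, one layer by default), not weights or other activations. `lead_overlap` and `curate` are a hypothetical curation filter: they measure how much of a reference summary's vocabulary already appears in the article's first sentences and drop pairs above a threshold. The function names, the two-sentence lead and the 0.8 threshold are illustrative assumptions, not the values used in the project.

```python
import re
from typing import List, Tuple


def attention_matrix_bytes(seq_len: int, n_heads: int = 1, batch_size: int = 1,
                           bytes_per_value: int = 4) -> int:
    """Memory for one layer's attention scores: one seq_len x seq_len matrix per head."""
    return batch_size * n_heads * seq_len * seq_len * bytes_per_value


def tokens(text: str) -> List[str]:
    """Lowercase word tokens."""
    return re.findall(r"\w+", text.lower())


def lead_overlap(summary: str, article: str, k: int = 2) -> float:
    """Share of summary tokens that already occur in the article's first k sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", article.strip())
    lead_vocab = set(tokens(" ".join(sentences[:k])))
    summary_tokens = tokens(summary)
    if not summary_tokens:
        return 0.0
    return sum(t in lead_vocab for t in summary_tokens) / len(summary_tokens)


def curate(pairs: List[Tuple[str, str]], threshold: float = 0.8,
           k: int = 2) -> List[Tuple[str, str]]:
    """Keep (article, summary) pairs whose summary is not mostly a copy of the lead."""
    return [(a, s) for a, s in pairs if lead_overlap(s, a, k) < threshold]
```

With 12 heads (a hypothetical BERT-base-like setting), a 1024-token input already needs 48 MiB of attention scores per layer and per example, versus 12 MiB at 512 tokens.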



I worked on this exciting topic for about a year just before my PhD, so this post is an opportunity for me to dive back into the subject and take stock of the latest innovations in the field.

So let's take an overview of the topic, starting with the different types of summaries, before looking in a little more detail at two kinds of systems: those based on AI and neural networks, and those focused on the optimal extraction of information.

The different types of summary

When we talk about summaries, we often think of the back cover of a book or the synopsis of a film. These generally avoid spoiling the ending, whereas that is precisely what we would expect from a classic automatic summarization tool: to recount the plot, so that the summary alone is enough to know the essentials. These are single-document summaries, that is, we summarize only one document (a film, a book, an article, …).

Conversely, we may want a multi-document summary, which is more common in the context of press reviews: we want a summary of the most important information as reported by various news outlets.

Once we have decided on the type of data to summarize, single- or multi-document, we can choose between two approaches: the extractive approach, which consists of extracting pieces of information as they are and then reassembling them into a summary, and the generative approach, which consists of creating new sentences that do not appear in the original documents, in order to obtain a more fluid and freer summary.

Beyond these criteria, there are various styles of summaries that we will not cover here: update summaries, which summarize the information in a new document that has not been reported so far; guided summaries, which adopt a specific angle given by the user; and so on.

AI and neural networks revolutionize automatic summarization

Until the mid-2010s, most summaries were extractive. However, these algorithms were already very diverse, ranging from the selection and extraction of whole sentences to the extraction of precise pieces of information that were then slotted into fill-in-the-blank texts prepared in advance, called templates. The arrival of new approaches based on neural networks changed the situation considerably. These algorithms are much better than earlier ones at generating grammatical, fluent text, as this GPT demo shows.
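The template-based end of that spectrum can be illustrated with a toy example: a pattern extracts precise pieces of information (who met whom, and where) and slots them into a fill-in-the-blank sentence prepared in advance. The regular expression and template below are hypothetical and deliberately narrow: they only handle sentences shaped exactly like the example.

```python
import re
from string import Template
from typing import Optional

# Hypothetical extraction pattern: "<First Last> met <First Last> in <Place>".
MEETING = re.compile(
    r"(?P<person>[A-Z]\w+ [A-Z]\w+) met (?P<counterpart>[A-Z]\w+ [A-Z]\w+)"
    r" in (?P<place>[A-Z]\w+)"
)
# Hypothetical template whose holes are filled with the extracted values.
SUMMARY_TEMPLATE = Template("$person met $counterpart in $place.")


def template_summary(text: str) -> Optional[str]:
    """Fill the template from the first match in the text, or return None."""
    match = MEETING.search(text)
    if match is None:
        return None
    return SUMMARY_TEMPLATE.substitute(match.groupdict())
```

For instance, "On Tuesday, Emmanuel Macron met Joe Biden in Washington to discuss trade." yields "Emmanuel Macron met Joe Biden in Washington.", while any sentence phrased differently yields nothing.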

Neural networks, however, require large amounts of training data and are relatively hard to interpret. They work very well for generating comments whose truthfulness matters little, but they can easily generate contradictory or simply incorrect information, which is a problem when summarizing news articles, for example. Many research papers study these “hallucinations” of neural networks.

An example of a hybrid tool: Potara

Automatic summarization was the first research topic I became interested in, and during my master's I had the opportunity to develop a hybrid extraction/generation system for multi-document summarization, that is, summarizing a set of documents about the same topic.

The idea was to start from a classic extraction, namely identifying the most important sentences and assembling them into a summary. The problem with this approach is that the most important sentences could often be improved further. For example, in an article about a presidential trip, the sentence “Emmanuel Macron met his American counterpart and discussed economics” could be improved to “Emmanuel Macron met Joe Biden and discussed economics”. Because journalists carefully avoid repetition, we frequently run into this kind of phenomenon.

To overcome this shortcoming, we can identify similar sentences in different documents and try to merge them into a better sentence. Thus, from the following two sentences:

- Emmanuel Macron met his American counterpart in Washington and talked about economics at length.

- The French president met Joe Biden and discussed economics.

We can create a short and informative sentence:

- Emmanuel Macron met Joe Biden in Washington and discussed economics.

Several steps are needed to achieve this result: finding similar sentences, finding the best fusion, and checking that the fusion is actually better than the original sentence. They rely on several technologies: Word2Vec embeddings from neural networks to find similar sentences, co-occurrence graphs to merge them, and ILP (integer linear programming) optimization to select the best fusions.
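The fusion step can be sketched with a simplified word graph, in the spirit of the co-occurrence graphs mentioned above (this is not Potara's actual code): every sentence becomes a path from a start node to an end node, identical words share a node, and the shortest start-to-end path gives a compressed sentence that can combine fragments of both inputs. Real systems also handle repeated words and part-of-speech tags and score many candidate paths rather than keeping only the shortest; the function names here are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, List, Optional, Set


def build_word_graph(sentences: List[str]) -> Dict[str, Set[str]]:
    """Simplified word graph: identical tokens share a node, edges follow word order."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for sentence in sentences:
        words = ["<START>"] + sentence.replace(".", " .").split() + ["<END>"]
        for left, right in zip(words, words[1:]):
            graph[left].add(right)
    return graph


def shortest_fusion(graph: Dict[str, Set[str]]) -> Optional[str]:
    """Breadth-first search for the shortest <START> -> <END> path."""
    queue = deque([["<START>"]])
    visited = {"<START>"}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == "<END>":
            return " ".join(path[1:-1]).replace(" .", ".")
        for nxt in sorted(graph[node]):  # sorted for deterministic tie-breaking
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None


SENTENCES = [
    "Emmanuel Macron met his American counterpart in Washington and talked about economics at length.",
    "The French president met Joe Biden and discussed economics.",
]
fused = shortest_fusion(build_word_graph(SENTENCES))
```

On the two example sentences, this returns "Emmanuel Macron met Joe Biden and discussed economics.", which drops "in Washington": the shortest path favors brevity over informativeness, which is why a separate step has to check that a fusion is actually better than the originals.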

If you want to learn more, Potara is open source, though it has not been maintained for a while. The project notably served as a showcase when I finished my studies, so it came with documentation, tests, continuous integration, deployment on PyPI, and so on.

What is a good automatic summary ?

While some criteria seem obvious and relatively easy to assess (the grammaticality of sentences, for example), others are much more complex. Deciding which information in a text is most important is already a very subjective task. Evaluating fluency and word choice amounts to editorial work, not to mention the political slant a summary can take!

New generative models based on neural networks can introduce pejorative (or laudatory) judgments and qualifiers, an effect that may be desirable when generating a film review, but much less so when summarizing a presidential candidate's program!

Automatic summarization therefore remains a very active research topic, and probably will for a while, particularly regarding the ability to steer the algorithm's output toward a particular sentiment, a specific style or a given political slant. In industry, it is only beginning to enter very specific settings (meeting summaries, for example).
